Compares stacked elastic net, tuned elastic net, ridge and lasso.

cv.starnet(

y,

X,

family = "gaussian",

nalpha = 21,

alpha = NULL,

nfolds.ext = 10,

nfolds.int = 10,

foldid.ext = NULL,

foldid.int = NULL,

type.measure = "deviance",

alpha.meta = 1,

nzero = NULL,

intercept = NULL,

upper.limit = NULL,

unit.sum = NULL,

...

)Arguments

- y

response: numeric vector of length \(n\)

- X

covariates: numeric matrix with \(n\) rows (samples) and \(p\) columns (variables)

- family

character "gaussian", "binomial" or "poisson"

- nalpha

number of

alphavalues- alpha

elastic net mixing parameters: vector of length

nalphawith entries between \(0\) (ridge) and \(1\) (lasso); orNULL(equidistance)- nfolds.ext, nfolds.int, foldid.ext, foldid.int

number of folds (

nfolds): positive integer; fold identifiers (foldid): vector of length \(n\) with entries between \(1\) andnfolds, orNULL, for hold-out (single split) instead of cross-validation (multiple splits): setfoldid.extto \(0\) for training and to \(1\) for testing samples- type.measure

loss function: character "deviance", "class", "mse" or "mae" (see

cv.glmnet)- alpha.meta

meta-learner: value between \(0\) (ridge) and \(1\) (lasso) for elastic net regularisation;

NAfor convex combination- nzero

number of non-zero coefficients: scalar/vector including positive integer(s) or

NA; orNULL(no post hoc feature selection)- intercept, upper.limit, unit.sum

settings for meta-learner: logical, or

NULL(intercept=!is.na(alpha.meta),upper.limit=TRUE,unit.sum=is.na(alpha.meta))- ...

further arguments (not applicable)

Value

List containing the cross-validated loss

(or out-of sample loss if nfolds.ext equals two,

and foldid.ext contains zeros and ones).

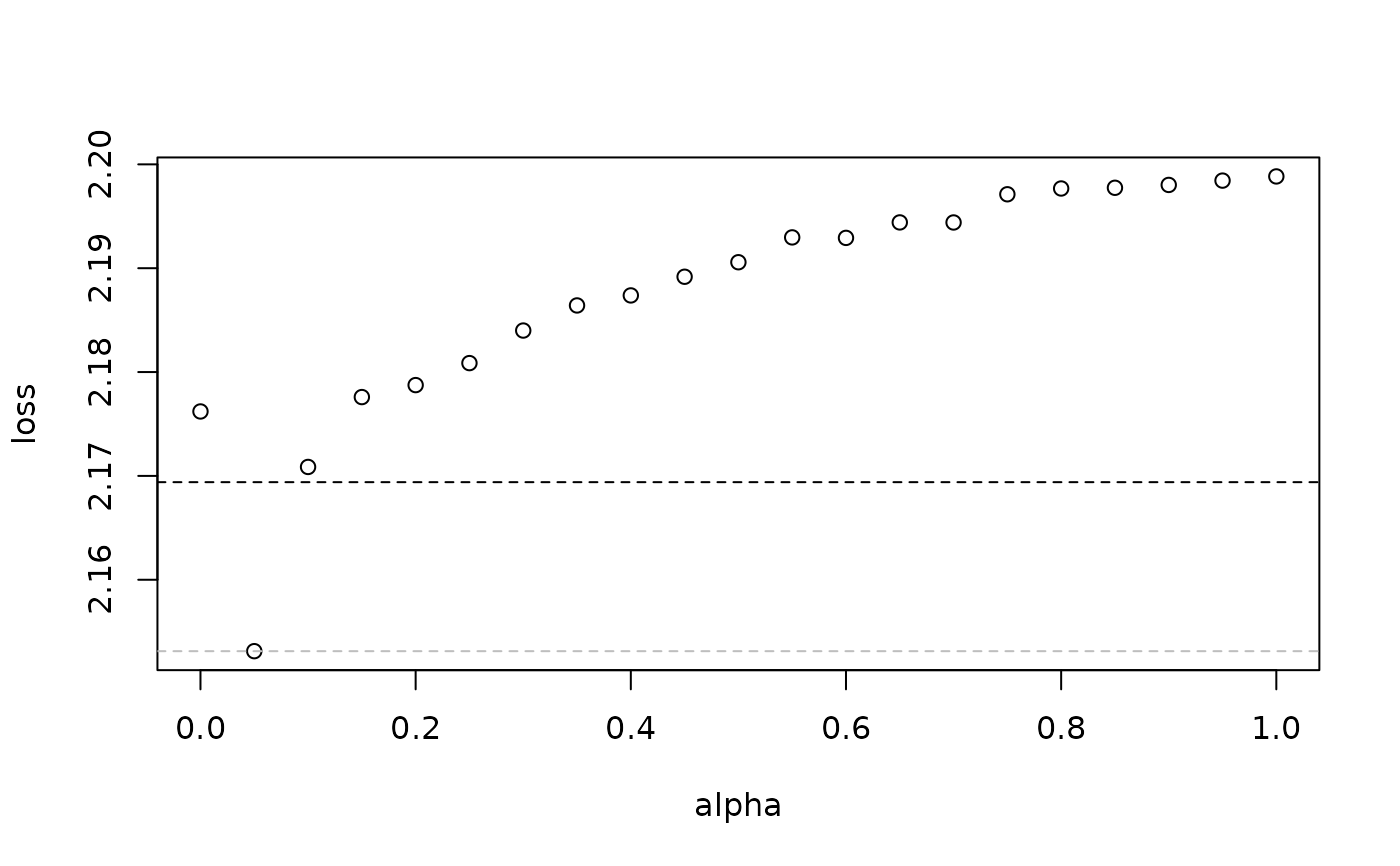

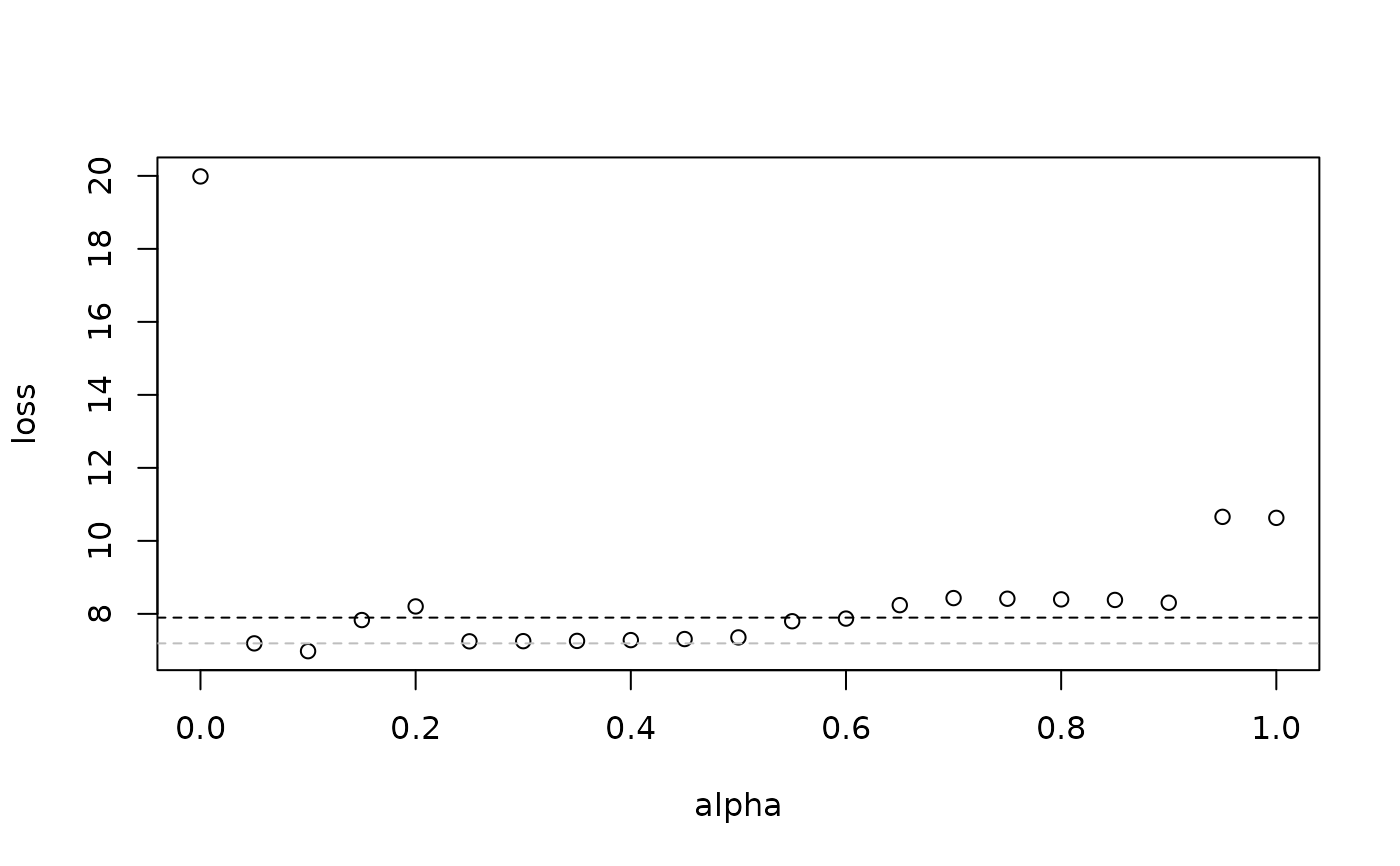

The slot meta contains the loss from the stacked elastic net

(stack), the tuned elastic net (tune), ridge, lasso,

and the intercept-only model (none).

The slot base contains the loss from the base learners.

And the slot extra contains the loss from the restricted

stacked elastic net (stack), lasso, and lasso-like elastic net

(enet),

with the maximum number of non-zero coefficients shown in the column name.

Examples

# \donttest{

loss <- cv.starnet(y=y,X=X)# }

# \donttest{

loss <- cv.starnet(y=y,X=X)# }